Monitoring and constraining adaptive systems

"Where do you think you're going?" Keeping a close eye on adapting systems.

This project (also see flyer) is defined within the scope of the Hybrid Intelligence: Augmenting Human Intellect project. It is focused on Adaptive and Responsible Hybrid Intelligence and is a collaboration between partners from Utrecht University, the Vrije Universiteit Amsterdam and the TU Delft.

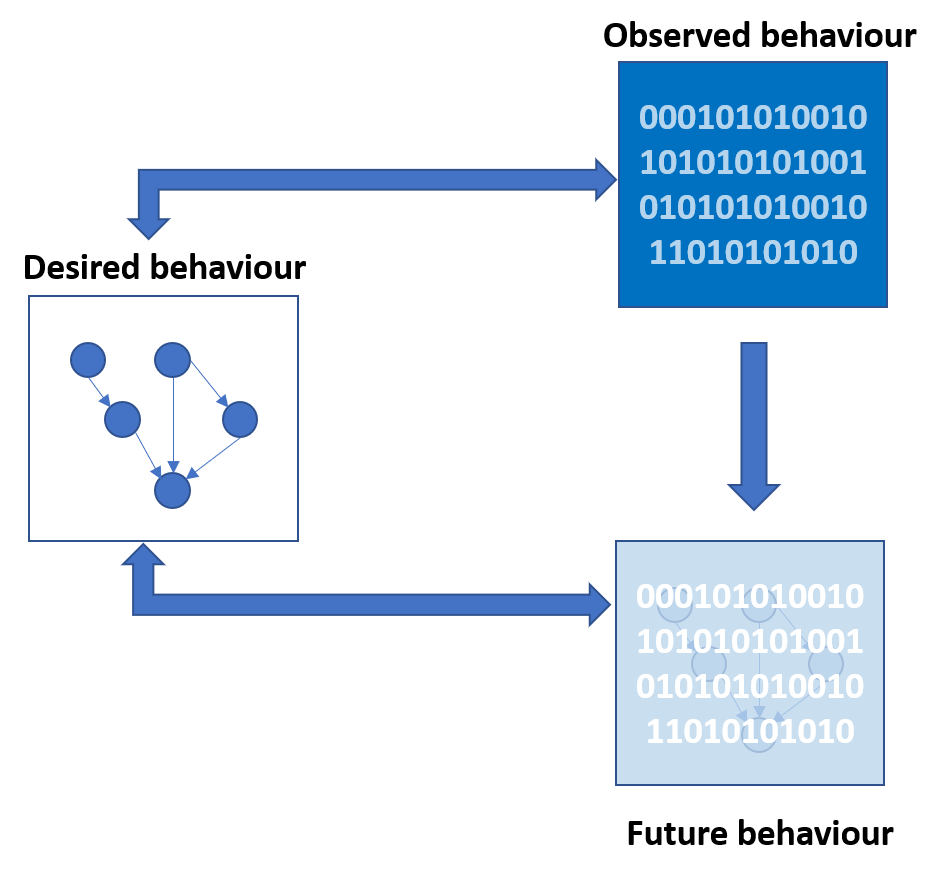

What is our aim? We will design monitoring systems that check whether an adaptive system obeys various types of constraint. Constraints may be the result of limited resources, laws and regulations, ethical or societal considerations, or any other consideration that matters in the wider environment in which the adaptive system operates. These constraints may themselves change over time: new laws may be introduced, safety regulations may become stricter, etc. The monitoring system will model the constraints in a human-intuitive way, both to make it easier for human agents to update them and to allow for explaining which constraints the adaptive system is or isn't obeying.

The monitoring system will be able to detect and react to violations of constraints, and preferably to predict that violations are about to occur, so that the human agent can be warned well in advance. Either the human agent or the monitoring system can subsequently provide input to the learning algorithm of the adaptive system to steer the system back on track (automated steering will probably be beyond the scope of this project).

Why is this important? Since we allow an adaptive system to change itself, we need to be able to trust that it does not evolve into a system that violates the constraints. A monitoring system can thus be used to certify that an adaptive system adheres to certain constraints. Moreover, since the monitoring system models the constraints in a human-intuitive way, these can easily be inspected and changed. Finally, being able to predict the behaviour of an adaptive system allows for analysing and explaining it. These aspects are all important to facilitate communication and collaboration between human and artificial adaptive agents.

How will we approach this? Our monitoring system will consist of the following components:

- A "normative" component that models the constraints that the adaptive system should obey. Depending on the type of constraint, these could be expressed in representations ranging from purely logic-based to probabilistic. We will consider different existing representations and investigate their suitability for capturing different types of constraint.

- A "learning" component that learns and is able to predict the actual behaviour of the adaptive system with respect to the constraints laid down in the normative component.
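
To make the idea of a human-intuitive normative component a little more concrete, the following is a minimal sketch of the simplest, purely logic-based end of the spectrum. It is an illustration under stated assumptions, not the project's design: the `Constraint` class, the `violated` helper, the state variables (`energy`, `distance`) and the thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical state representation: named numeric observations of the adaptive system.
State = Dict[str, float]


@dataclass(frozen=True)
class Constraint:
    """A single constraint with a human-readable description (illustrative only)."""
    name: str
    description: str                  # plain-language statement a human can inspect and edit
    holds: Callable[[State], bool]    # logic-based check: True if the state satisfies it


def violated(constraints: List[Constraint], state: State) -> List[str]:
    """Return the names of all constraints that the given state does not satisfy."""
    return [c.name for c in constraints if not c.holds(state)]


# Example constraints; the variables and limits are made up for illustration.
constraints = [
    Constraint(
        name="energy_budget",
        description="Energy use stays at or below 100 units.",
        holds=lambda s: s["energy"] <= 100.0,
    ),
    Constraint(
        name="min_distance",
        description="Keep at least 0.5 m away from people.",
        holds=lambda s: s["distance"] >= 0.5,
    ),
]

observed = {"energy": 120.0, "distance": 0.8}
print(violated(constraints, observed))  # → ['energy_budget']
```

Keeping the description next to the check is one simple way to support both updating a constraint and explaining which constraint was violated; probabilistic representations would replace the boolean check with a degree of belief.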

For our components we will build on the following existing techniques: Bayesian networks, sum-product networks, and deep generative modelling (e.g., VAEs, flow-based models). The first two can be both learned and handcrafted and can therefore be used in either component. The deep generative models allow for detecting out-of-distribution examples and can generate new samples that allow for predicting the adaptive system's behaviour. In addition, our system will have available a set of metrics to compare the actual and desired behaviour and decide whether or not a warning should be issued. We will investigate existing metrics and design new ones tailored to the task at hand.
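
As an illustration of how a metric might compare actual and desired behaviour, the sketch below uses the Kullback-Leibler divergence between two discrete distributions and issues a warning when it exceeds a threshold. This is only one candidate among many existing metrics and is not necessarily the one the project will adopt; the function names, the behaviour categories (`safe`, `risky`) and the threshold value are assumptions made for the example.

```python
import math
from typing import Dict

Distribution = Dict[str, float]  # hypothetical: behaviour category -> probability


def kl_divergence(p: Distribution, q: Distribution, eps: float = 1e-12) -> float:
    """KL(p || q) in nats for discrete distributions over named categories.

    Probabilities in q are floored at eps so that categories the desired
    distribution rules out yield a large but finite penalty.
    """
    total = 0.0
    for key in set(p) | set(q):
        pk = p.get(key, 0.0)
        if pk > 0.0:
            qk = max(q.get(key, 0.0), eps)
            total += pk * math.log(pk / qk)
    return total


def should_warn(actual: Distribution, desired: Distribution, threshold: float) -> bool:
    """Issue a warning when actual behaviour diverges too far from desired behaviour."""
    return kl_divergence(actual, desired) > threshold


# Made-up numbers: desired behaviour from the normative component,
# actual behaviour as estimated by the learning component.
desired = {"safe": 0.95, "risky": 0.05}
actual_ok = {"safe": 0.94, "risky": 0.06}
actual_drift = {"safe": 0.70, "risky": 0.30}

THRESHOLD = 0.05  # assumed value; in practice this would need to be tuned
print(should_warn(actual_ok, desired, THRESHOLD))
print(should_warn(actual_drift, desired, THRESHOLD))
```

Tracking such a score over time, rather than evaluating it once, is one way a monitor could notice a drift towards a violation before the violation actually occurs.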