Interactive obstruction-free lensing for volume data

Exploring a 3D scalar dataset (a volume) can be hard. Consider, for instance, a medical 3D scan in which a small region of interest is deeply embedded in the volume. How can one quickly and easily find such regions, isolate them from their surroundings, and study them visually?

Obstruction-free lens

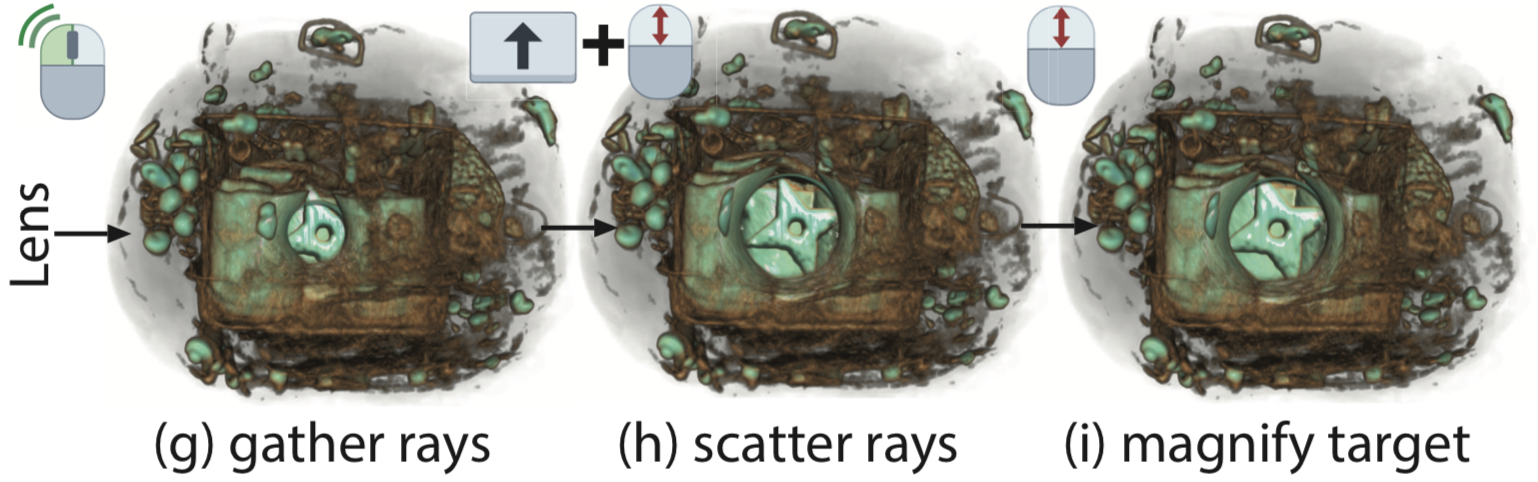

We developed a lensing tool that does precisely that. The user points a virtual ray at any location in the volume, in any direction, and the visualized data is deformed so that regions close to the ray are left in place while the rest of the data is pushed away. This literally 'opens up' a hole in the volume through which the region of interest can be examined.

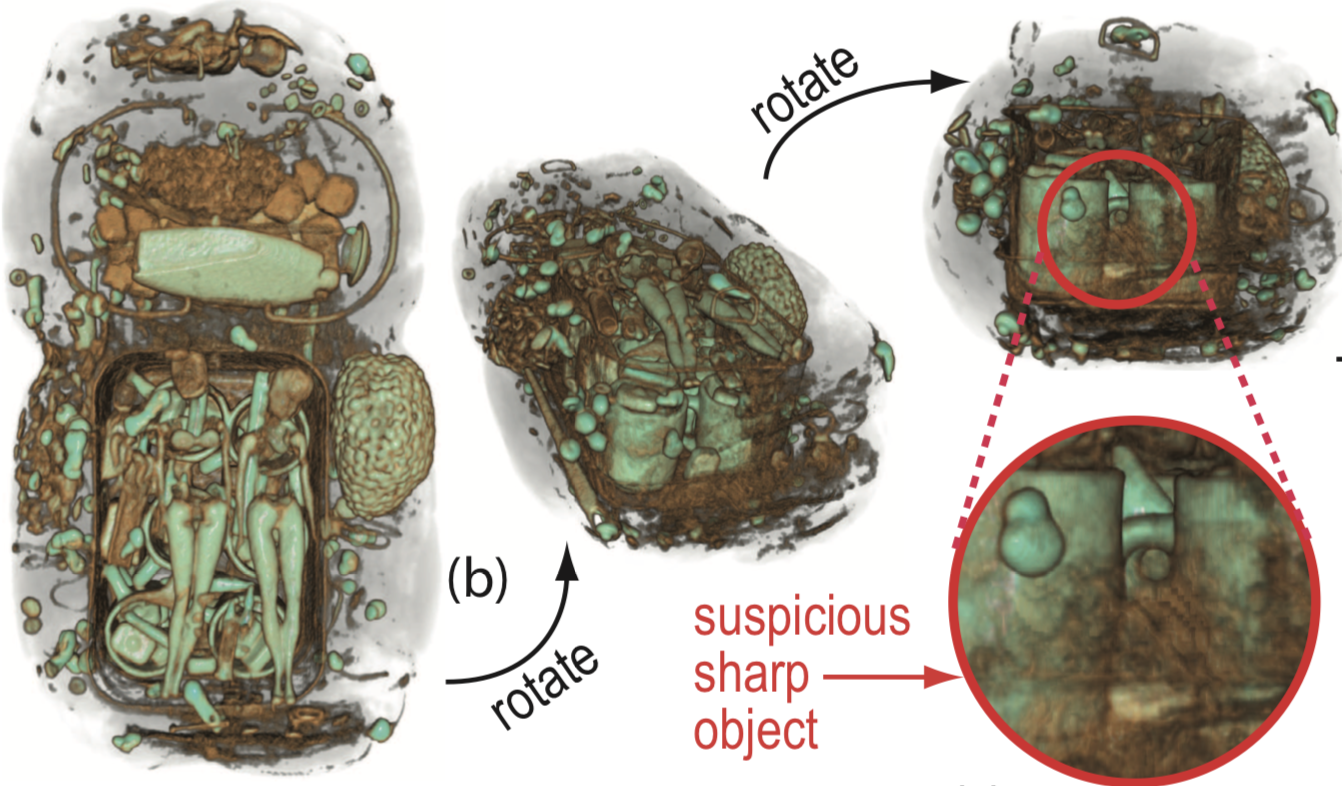
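The deformation described above can be sketched as a per-sample displacement. This is a minimal illustration under stated assumptions, not the authors' formulation: the ray is treated as an infinite line, samples within a hypothetical `radius` of it stay put, and every other sample is pushed radially away from it by a constant, hypothetical `gap`, which leaves an empty shell around the kept region. The names `deform`, `radius`, and `gap` are illustrative only.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def deform(p: Vec, origin: Vec, direction: Vec, radius: float, gap: float) -> Vec:
    """Hypothetical lens deformation (not the published model): keep samples
    within `radius` of the ray, push all others radially away from it by `gap`."""
    d = scale(direction, 1.0 / math.sqrt(dot(direction, direction)))  # unit ray direction
    rel = sub(p, origin)
    foot = scale(d, dot(rel, d))        # projection of p onto the ray axis
    perp = sub(rel, foot)               # component perpendicular to the ray
    dist = math.sqrt(dot(perp, perp))
    if dist <= radius:
        return p                        # close to the ray: left in place
    return add(p, scale(perp, gap / dist))  # pushed outward by exactly `gap`


origin, direction = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
print(deform((0.5, 0.0, 3.0), origin, direction, 1.0, 2.0))  # (0.5, 0.0, 3.0)
print(deform((2.0, 0.0, 3.0), origin, direction, 1.0, 2.0))  # (4.0, 0.0, 3.0)
```

A practical lens would more likely use a smooth falloff rather than a constant push; the constant `gap` is chosen here only because it keeps the mapping simple and easy to invert.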

The image below shows a use case. Airport security officers examine a conventional volumetric CT scan of a piece of baggage and believe they have spotted a suspicious object (possibly a weapon). However, isolating this object in the scan is hard. How can they get more insight?

This is where the lens comes into play: the officers point the mouse at the suspicious object and click a button. A tunnel-like hole gradually opens, exposing a 3D region around the clicked location. They can then interactively adjust the size of the hole and/or zoom in or out on the area of interest. The object is now revealed to be a ninja star, a throwing weapon!

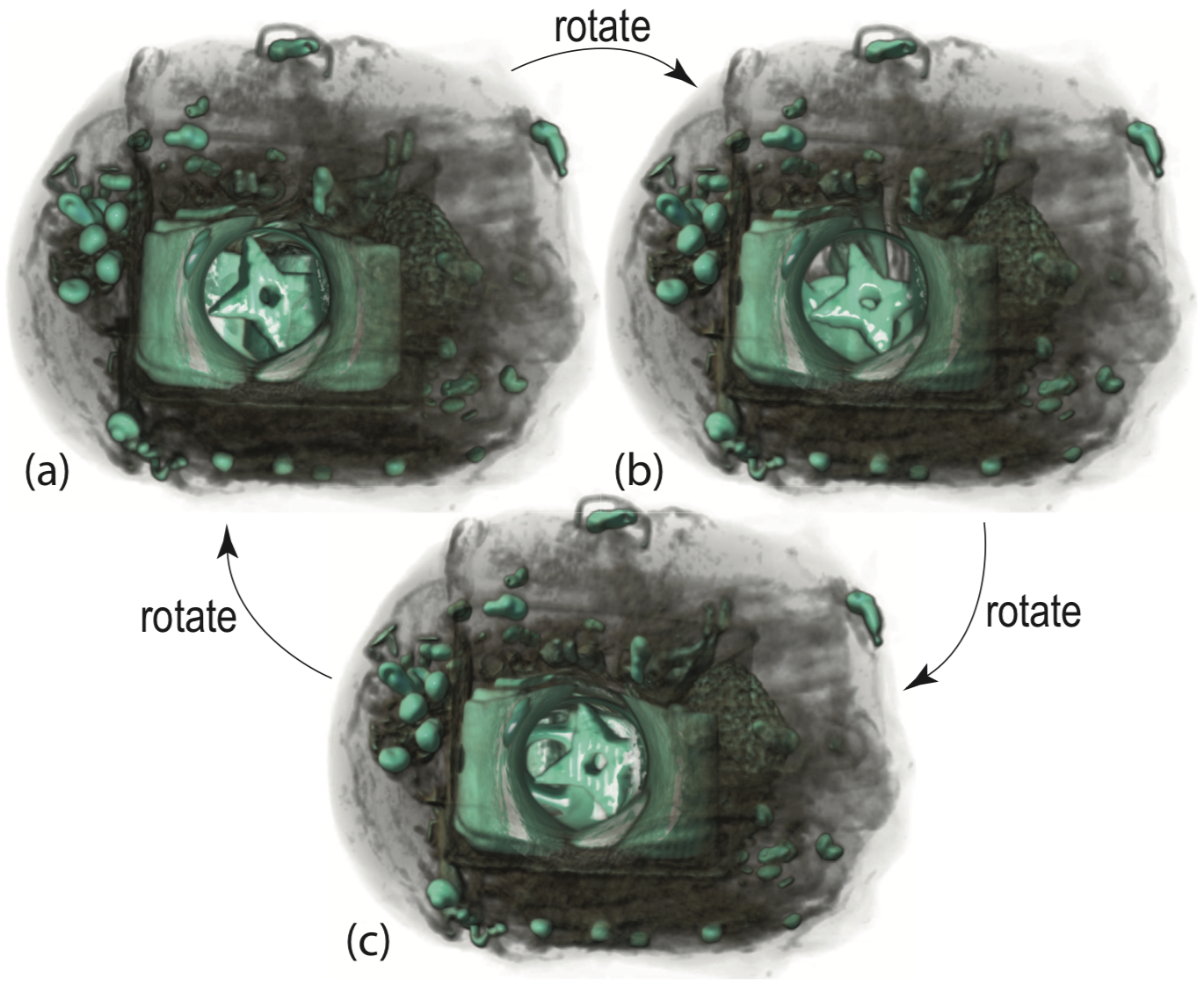
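The gradual opening of the hole and the interactive size adjustment in this example can be modeled with a small piece of lens state. The sketch below is an assumption, not the authors' code: the hypothetical `LensState` class grows the hole width from 0 to `max_gap` over `duration` seconds with a smoothstep easing curve, and `resize` scales the focus radius (for instance, on a mouse-wheel event) within fixed bounds. Zooming is not modeled.

```python
from dataclasses import dataclass


def smoothstep(x: float) -> float:
    """Clamp x to [0, 1] and ease it in and out (3x^2 - 2x^3)."""
    x = min(max(x, 0.0), 1.0)
    return x * x * (3.0 - 2.0 * x)


@dataclass
class LensState:
    # All fields are hypothetical tuning parameters, not values from the paper.
    radius: float = 1.0      # size of the focus region kept in place
    max_gap: float = 2.0     # final width of the opened hole
    duration: float = 0.5    # seconds needed to fully open the hole
    elapsed: float = 0.0     # seconds since the user clicked

    def tick(self, dt: float) -> None:
        """Advance the opening animation by dt seconds."""
        self.elapsed += dt

    def gap(self) -> float:
        """Current hole width: grows smoothly from 0 to max_gap."""
        if self.duration <= 0.0:
            return self.max_gap
        return self.max_gap * smoothstep(self.elapsed / self.duration)

    def resize(self, factor: float, lo: float = 0.1, hi: float = 10.0) -> None:
        """Scale the focus radius, clamped to [lo, hi]."""
        self.radius = min(max(self.radius * factor, lo), hi)


lens = LensState()
for _ in range(3):
    print(round(lens.gap(), 3))  # prints 0.0, then 1.0, then 2.0
    lens.tick(0.25)
```

Easing the width, rather than switching the hole on instantly, helps the user keep track of where the displaced context data went.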

For further insight, users can even virtually rotate the area in focus without changing the global viewpoint. The effect is as if the object of interest were turned around inside the volume.
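One way to obtain such a local rotation is to rotate only the samples inside a focus region, leaving everything else untouched. The sketch below assumes a spherical focus region and uses Rodrigues' rotation formula about an arbitrary axis through the focus center; the functions `rotate_about_axis` and `rotate_focus` are hypothetical illustrations, not the method from the paper.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def rotate_about_axis(v: Vec, axis: Vec, angle: float) -> Vec:
    """Rodrigues' rotation of vector v by `angle` radians about `axis`."""
    n = math.sqrt(axis[0] ** 2 + axis[1] ** 2 + axis[2] ** 2)
    k = (axis[0] / n, axis[1] / n, axis[2] / n)  # unit rotation axis
    c, s = math.cos(angle), math.sin(angle)
    k_dot_v = k[0] * v[0] + k[1] * v[1] + k[2] * v[2]
    k_cross_v = (k[1] * v[2] - k[2] * v[1],
                 k[2] * v[0] - k[0] * v[2],
                 k[0] * v[1] - k[1] * v[0])
    return tuple(v[i] * c + k_cross_v[i] * s + k[i] * k_dot_v * (1.0 - c)
                 for i in range(3))


def rotate_focus(p: Vec, center: Vec, radius: float, axis: Vec, angle: float) -> Vec:
    """Rotate p about `center` only if it lies inside the spherical focus
    region; samples outside keep their position, so the global view is unchanged."""
    rel = (p[0] - center[0], p[1] - center[1], p[2] - center[2])
    if rel[0] ** 2 + rel[1] ** 2 + rel[2] ** 2 > radius ** 2:
        return p
    r = rotate_about_axis(rel, axis, angle)
    return (center[0] + r[0], center[1] + r[1], center[2] + r[2])


# Quarter turn about the y axis of a point inside a unit focus sphere at (0, 0, 5).
print(rotate_focus((0.5, 0.0, 5.0), (0.0, 0.0, 5.0), 1.0, (0.0, 1.0, 0.0), math.pi / 2))
# approximately (0.0, 0.0, 4.5), up to floating-point rounding
```

In a raycaster, the inverse rotation (that is, `-angle`) would be applied to each sample inside the focus region before the volume is read.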

Applications

We validated the obstruction-free lens on several datasets and use cases: airport baggage control, lung CT diagnostic examination, 3D flow field exploration, and DTI (diffusion tensor imaging) exploration.

Video

The video below illustrates how the obstruction-free lens works.

Implementation

The obstruction-free lens is simple to implement and can be added to any volumetric raycaster. Its GPU-based implementation supports interactive frame rates for volumes of more than 1000³ voxels.
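One plausible way to add a lens to an existing raycaster is backward mapping: each sample along a view ray is passed through the inverse of the deformation before the volume is read. The sketch below is an illustration under assumptions, not the published implementation. It assumes a constant radial push (samples farther than `radius` from the lens ray are moved outward by `gap`), uses maximum intensity projection for brevity, and samples an analytic `field` function instead of a 3D texture. All names are hypothetical. It is CPU Python for readability; a GPU version would run the same per-sample logic in a shader.

```python
import math
from typing import Callable, Optional, Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def inverse_deform(q: Vec, origin: Vec, d: Vec, radius: float, gap: float) -> Optional[Vec]:
    """Map a displayed sample position back to the original volume.
    Returns None inside the opened hole (empty space). Assumes `d` is unit length."""
    rel = (q[0] - origin[0], q[1] - origin[1], q[2] - origin[2])
    t = dot(rel, d)
    perp = (rel[0] - t * d[0], rel[1] - t * d[1], rel[2] - t * d[2])
    s = math.sqrt(dot(perp, perp))  # distance from the lens ray
    if s <= radius:
        return q                    # focus region: not displaced
    if s < radius + gap:
        return None                 # inside the hole: no data here
    k = gap / s                     # undo the outward push of length `gap`
    return (q[0] - perp[0] * k, q[1] - perp[1] * k, q[2] - perp[2] * k)


def cast_ray_mip(eye: Vec, view: Vec, field: Callable[[Vec], float],
                 steps: int, step: float, lens_origin: Vec, lens_dir: Vec,
                 radius: float, gap: float) -> float:
    """Maximum intensity projection along one view ray, sampling the
    volume through the inverse lens mapping."""
    best = 0.0
    for i in range(steps):
        q = (eye[0] + view[0] * step * i,
             eye[1] + view[1] * step * i,
             eye[2] + view[2] * step * i)
        src = inverse_deform(q, lens_origin, lens_dir, radius, gap)
        if src is None:
            continue                # empty sample inside the hole
        best = max(best, field(src))
    return best


def uniform(p: Vec) -> float:
    return 1.0                      # toy volume: constant density everywhere


axis_o, axis_d = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
for x in (0.5, 1.5, 4.0):
    print(x, cast_ray_mip((x, 0.0, -5.0), (0.0, 0.0, 1.0), uniform, 100, 0.1,
                          axis_o, axis_d, 1.0, 2.0))
# 0.5 1.0 (focus region), 1.5 0.0 (inside the hole), 4.0 1.0 (pushed-away context)
```

Because only the sample lookup changes, the rest of the raycaster (transfer function, compositing, early ray termination) can stay as it is, which is one reason such a lens is cheap to retrofit.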

Publications

M. Traore, C. Hurter, A. Telea. Interactive obstruction-free lensing for volumetric data visualization. IEEE TVCG 25(1), 2019, pp. 1029-1039.