Deep learning projections

Multidimensional projections are among the most scalable techniques for visualizing high-dimensional data. They scale better than comparable methods (e.g., parallel coordinate plots, table lenses, scatterplot matrices) and can compactly depict datasets of millions of samples and thousands of dimensions.

The literature describes tens of projection methods; for a detailed survey, including how they perform, see here. This is a complex and messy landscape. There seems to be no method that can simultaneously

- handle big data in near-real time

- have out-of-sample capabilities (meaning: being robust to changes in, or additions to, the data)

- work generically for any high-dimensional dataset

- have few, ideally no, tricky parameters the user must tune

- have a simple, open-source implementation

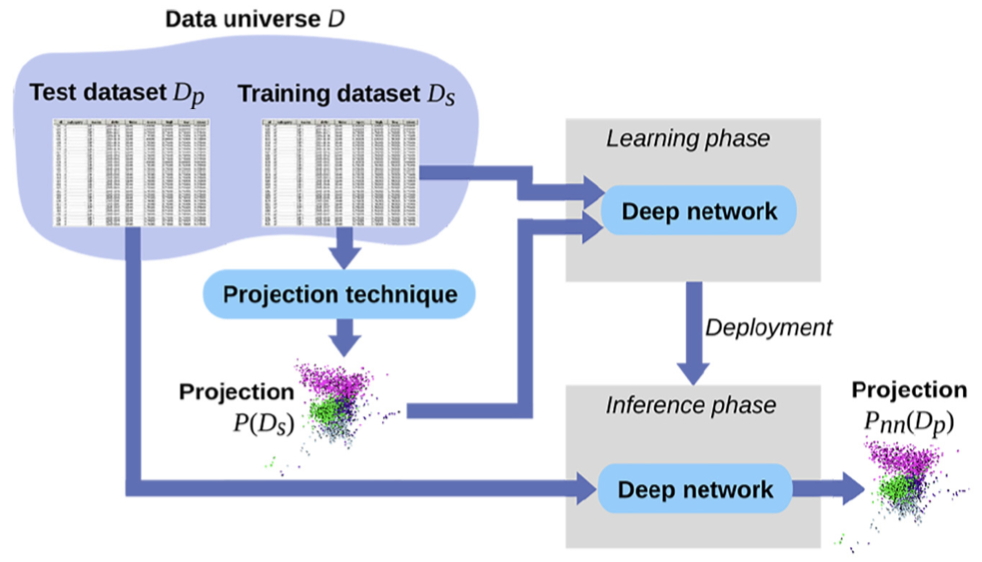

We propose a projection method that meets all the above requirements, called Neural Network Projection (NNP). For this, we use deep learning (see the accompanying image): Given a dataset D, we select a small subset D_S thereof. We project D_S with any projection method the user finds suitable. Then, we train a neural network to learn the mapping from D_S to its projection. Finally, we use the network to project the entire D, or any other similar dataset.
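The four steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the synthetic three-cluster dataset, PCA as the user-chosen projection, the single 64-unit hidden layer, the learning rate, and the helper names (`pca_2d`, `train_mlp`, `project`) are all hypothetical choices made for the example.

```python
import numpy as np

def pca_2d(X):
    """Stand-in for the user-chosen projection: PCA to 2D via SVD."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:2].T

def train_mlp(X, Y, hidden=64, epochs=1000, lr=0.01, seed=0):
    """Fit a one-hidden-layer ReLU network with full-batch gradient descent."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1 = rng.normal(0.0, np.sqrt(2.0 / d), (d, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.01, (hidden, Y.shape[1]))  # small init: output starts near 0
    b2 = np.zeros(Y.shape[1])
    for _ in range(epochs):
        H = np.maximum(X @ W1 + b1, 0.0)   # hidden activations
        G = (H @ W2 + b2 - Y) / n          # gradient of 0.5 * mean squared error w.r.t. output
        GH = (G @ W2.T) * (H > 0)          # backpropagate through the ReLU
        W2 -= lr * (H.T @ G)
        b2 -= lr * G.sum(axis=0)
        W1 -= lr * (X.T @ GH)
        b1 -= lr * GH.sum(axis=0)
    return W1, b1, W2, b2

def project(params, X):
    """Apply the trained network to any samples with the same dimensionality."""
    W1, b1, W2, b2 = params
    return np.maximum(X @ W1 + b1, 0.0) @ W2 + b2

# Synthetic dataset D (assumption): 5000 samples, 20 dimensions, 3 clusters.
rng = np.random.default_rng(42)
centers = rng.normal(0.0, 3.0, (3, 20))
labels = rng.integers(0, 3, 5000)
D = centers[labels] + rng.normal(0.0, 1.0, (5000, 20))
D = (D - D.mean(axis=0)) / D.std(axis=0)   # standardize features

# Step 1: select a small subset D_S of D.
idx = rng.choice(len(D), size=500, replace=False)
D_S = D[idx]

# Step 2: project D_S with the chosen method and rescale to [0, 1].
P_S = pca_2d(D_S)
P_S = (P_S - P_S.min(axis=0)) / (P_S.max(axis=0) - P_S.min(axis=0))

# Step 3: train the network to map D_S to its projection.
params = train_mlp(D_S, P_S)

# Step 4: project the entire dataset with the trained network.
P_D = project(params, D)

# Sanity check: compare the fit against always predicting the mean position.
train_mse = np.mean((project(params, D_S) - P_S) ** 2)
baseline_mse = np.mean((P_S - P_S.mean(axis=0)) ** 2)
print(P_D.shape, train_mse < baseline_mse)
```

Swapping `pca_2d` for another projection technique only changes how `P_S` is computed; the training and inference steps stay the same.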

Results

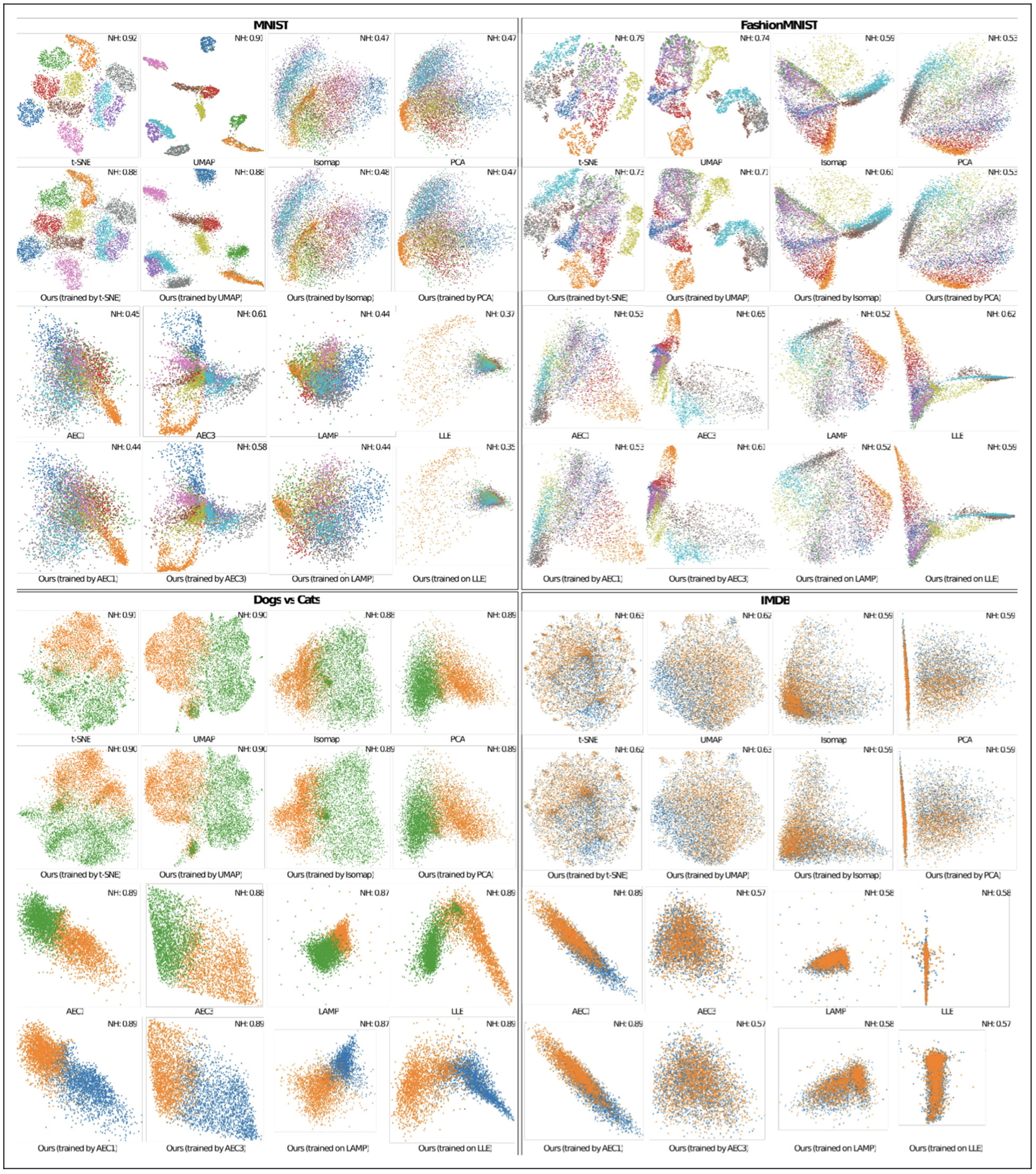

The accompanying image shows results of our method on several datasets (MNIST, Fashion MNIST, Dogs vs Cats, IMDB) when imitating several projection methods (t-SNE, UMAP, Isomap, PCA). Our method produces results very similar to those of all these projection techniques on all datasets.

Out-of-sample ability

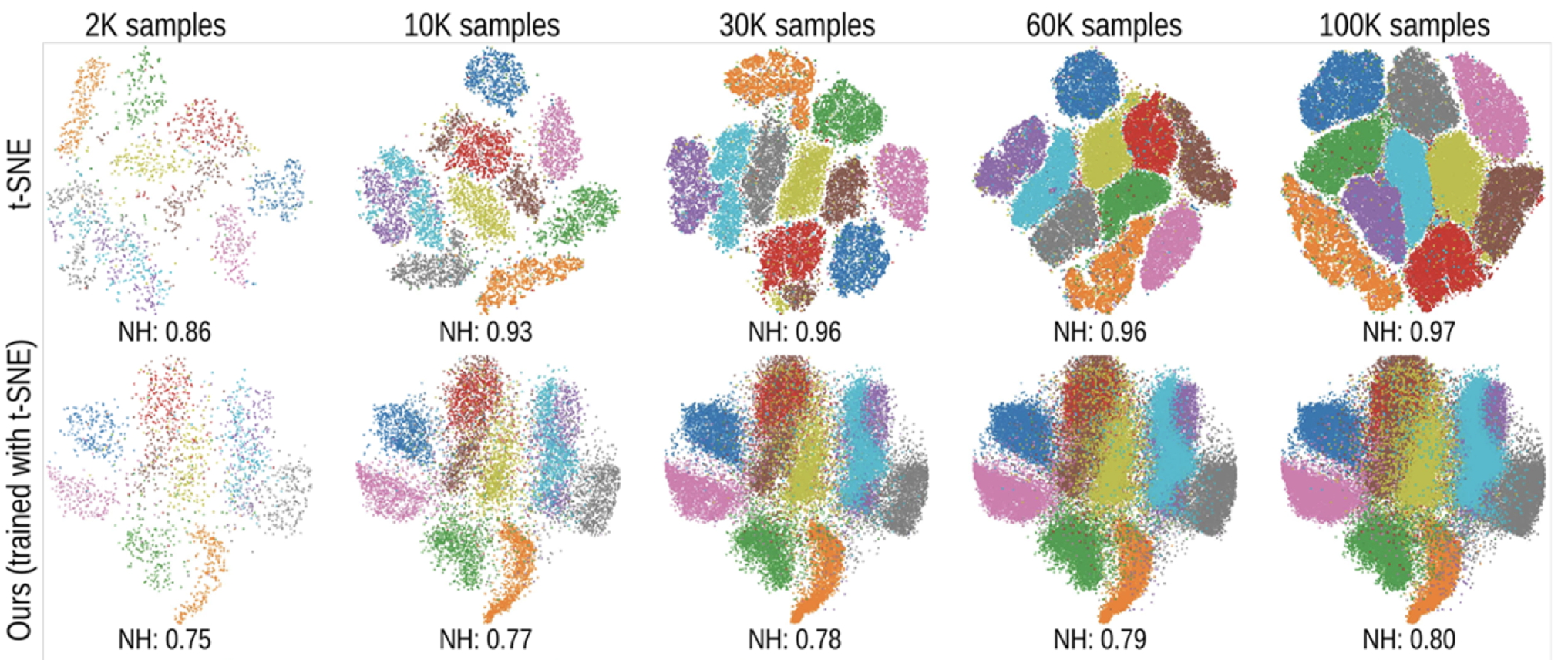

The accompanying image shows the projection of the MNIST dataset (2K to 100K samples) by t-SNE and by our method. t-SNE changes the projection wildly for different sample counts, making it very hard for the user to maintain her mental map. In contrast, our method keeps the same clusters in the same place for all sample counts (the trade-off being that our method generates slightly fuzzier projections than t-SNE).
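The stability described above follows from the network being a fixed function of each sample: once trained, the position of a point does not depend on which other points are projected with it. The sketch below illustrates only this property. It uses randomly chosen weights as a hypothetical stand-in for a trained network, and PCA refit on each dataset as a simple stand-in for a method that recomputes the projection whenever samples are added (it is not t-SNE, whose instability also has other causes).

```python
import numpy as np

def pca_2d(X):
    """Refit PCA on X itself and return its 2D coordinates."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:2].T

def fixed_map(X, W1, W2):
    """A frozen two-layer network: each output row depends only on its input row."""
    return np.maximum(X @ W1, 0.0) @ W2

rng = np.random.default_rng(0)
X = rng.normal(size=(2000, 10))
W1 = rng.normal(size=(10, 32))   # hypothetical weights, not a trained model
W2 = rng.normal(size=(32, 2))

small, large = X[:200], X        # the same first 200 samples, with and without additions

# Refit-per-dataset method: the first 200 points move when samples are added.
drift_refit = np.abs(pca_2d(small) - pca_2d(large)[:200]).max()

# Frozen parametric map: the first 200 points stay where they were
# (up to floating-point rounding).
drift_fixed = np.abs(fixed_map(small, W1, W2) - fixed_map(large, W1, W2)[:200]).max()

print(f"refit drift: {drift_refit:.3g}, fixed-map drift: {drift_fixed:.3g}")
```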

Scalability

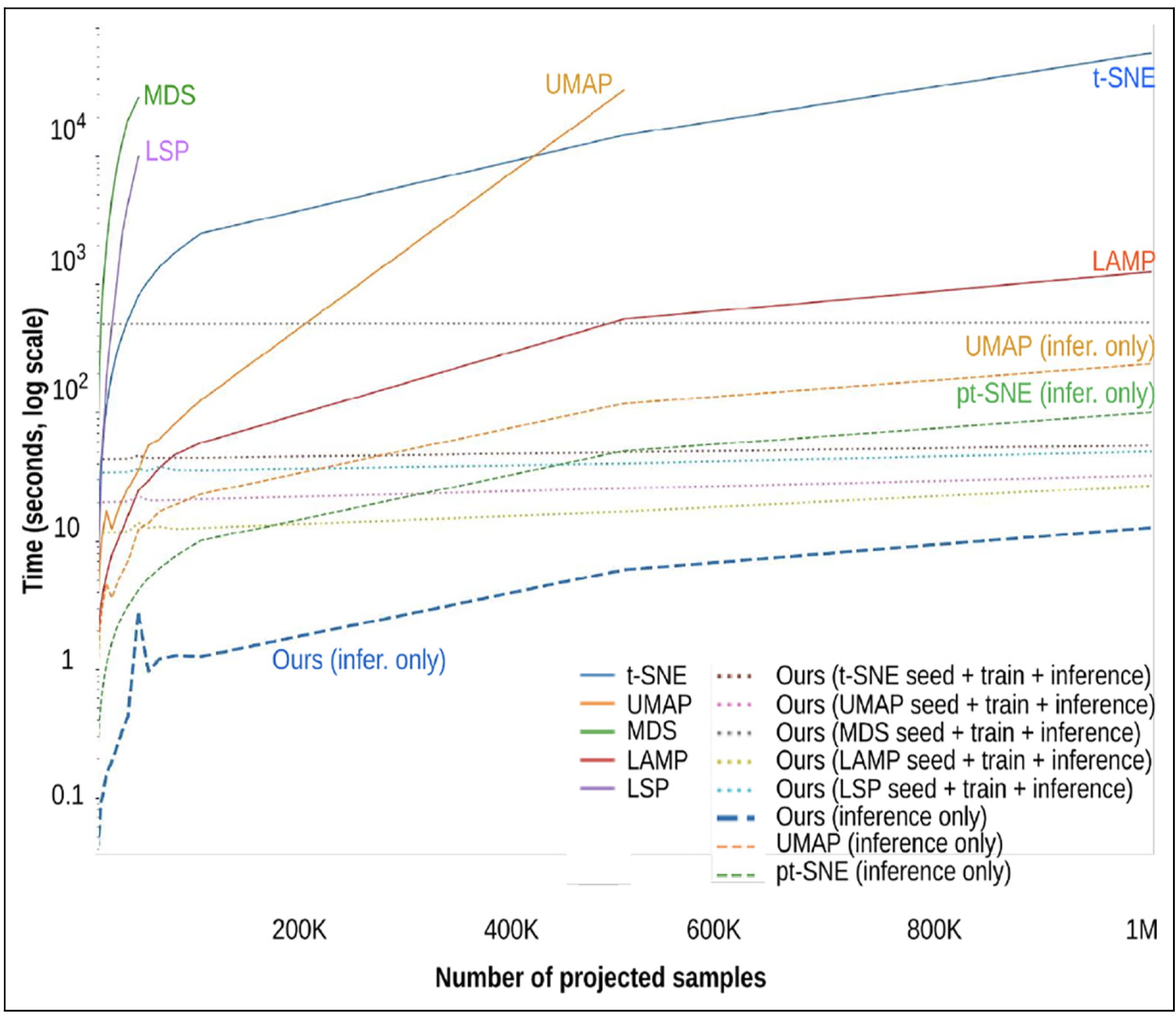

This is where our method truly excels. The accompanying image shows the speed of our method compared to other well-known projection methods on datasets ranging from 10K to 1M samples. Our method is over three orders of magnitude faster than t-SNE, 100x faster than LAMP, and 10x faster than UMAP and parametric t-SNE. Other methods (LSP, UMAP, MDS) couldn't even complete the test.

Implementation

This is the elegant part: nothing complex. The implementation is basic Python code using mainstream machine learning libraries. The open-source code is available here. You just need a regular PC with a reasonably modern GPU to run all this.

References

Deep Learning Multidimensional Projections. M. Espadoto, N. Hirata, A. Telea. Information Visualization, 2020.